Sim2Real Grasp Pose Estimation for Adaptive Robotic Applications

1 Institute for Computer Science and Control, Hungarian Research Network, Budapest, Hungary

2 CoLocation Center for Academic and Industrial Cooperation, Eötvös Loránd University, Budapest, Hungary

3 Department of Manufacturing Science and Engineering, Budapest University of Technology and Economics, Budapest, Hungary

* Corresponding author: Dániel Horváth: daniel.horvath@sztaki.hu



Qualitative Evaluation

Abstract & Method

We propose two vision-based, multi-object grasp-pose estimation models, the MOGPE Real-Time (RT) and the MOGPE High-Precision (HP), accompanied by a sim2real method based on domain randomization for pose estimation (S2R-PosEst). With our sim2real method, we reduced the reality gap to a satisfactory level.

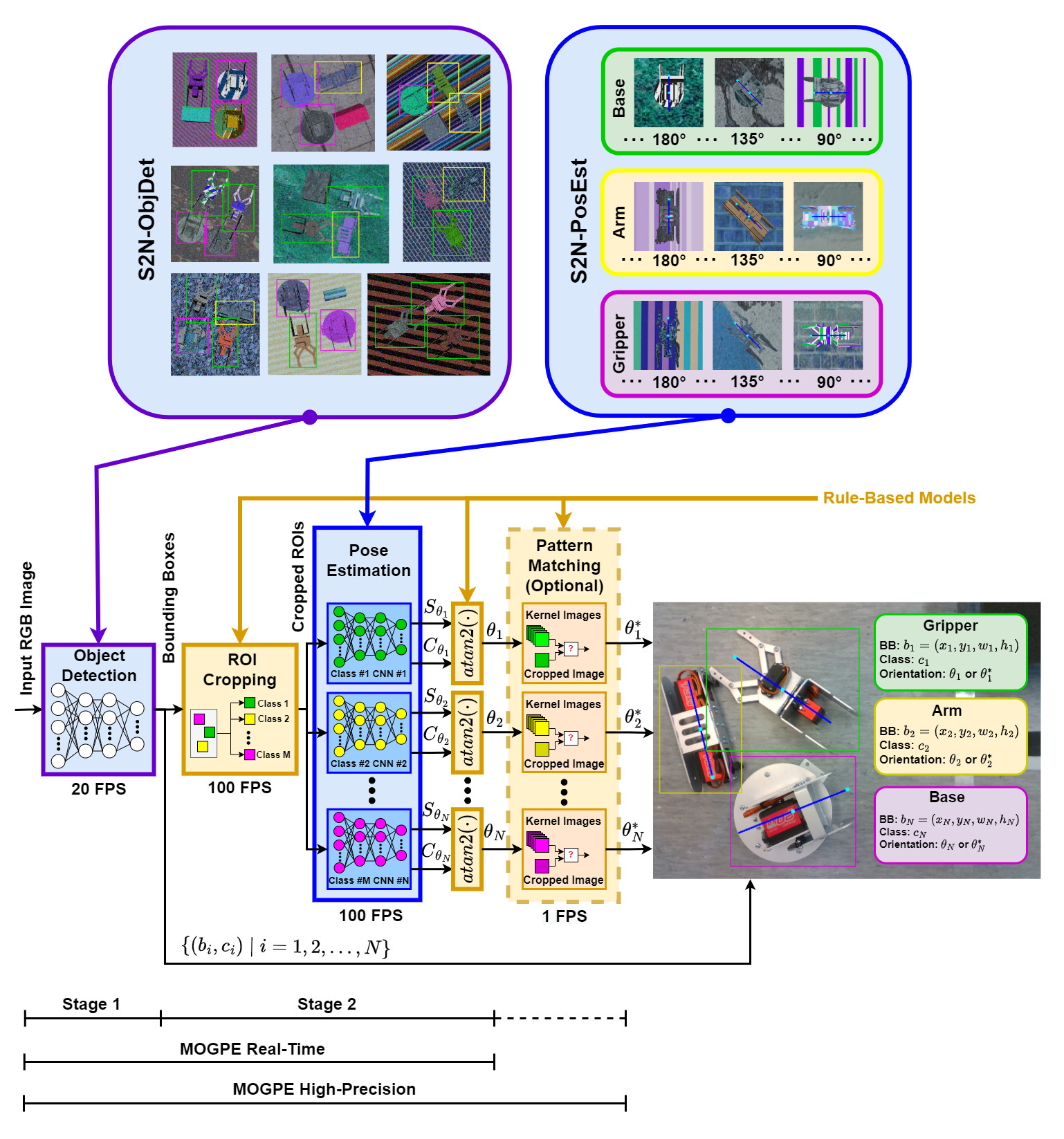

In our approach, we divide the problem into two stages. In the first stage, the different objects are localized (bounding box information with classification). In the second stage, the orientations of the detected objects are estimated with convolutional neural networks trained on class-specific examples. Our method is depicted in Fig. 1. The high-precision version contains a rule-based pattern-matching phase, which is applied locally, in the neighborhood of the estimated orientation. With this addition, the model achieves higher precision at the cost of extra computation.
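The two-stage structure described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: `detect`, `estimators`, `refine`, and the `score` function are hypothetical callables standing in for the object detector, the class-specific orientation networks, and the rule-based pattern matching, and the search window and step size are assumed values.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    label: str  # object class predicted in stage 1
    box: Box    # bounding box predicted in stage 1


@dataclass
class GraspPose:
    label: str
    center: Tuple[float, float]  # bounding-box centre in pixels
    angle_deg: float             # orientation around the z-axis


def crop(image: Sequence[Sequence[float]], box: Box) -> List[List[float]]:
    """Cut the bounding-box region out of a row-major image."""
    x0, y0, x1, y1 = box
    return [list(row[x0:x1]) for row in image[y0:y1]]


def refine_locally(score: Callable[[float], float], angle_deg: float,
                   window_deg: float = 5.0, step_deg: float = 1.0) -> float:
    """Pick the best-scoring angle in a small neighbourhood of the estimate.

    `score` is a hypothetical matching function (higher is better).
    """
    n = int(window_deg / step_deg)
    candidates = [(angle_deg + k * step_deg) % 360.0 for k in range(-n, n + 1)]
    return max(candidates, key=score)


def estimate_grasp_poses(image, detect: Callable, estimators: Dict[str, Callable],
                         refine: Optional[Callable] = None) -> List[GraspPose]:
    """Stage 1: localize and classify. Stage 2: per-class orientation estimate.

    Passing `refine` mimics the high-precision variant; omitting it mimics
    the real-time variant.
    """
    poses = []
    for det in detect(image):
        patch = crop(image, det.box)
        angle = estimators[det.label](patch) % 360.0
        if refine is not None:
            angle = refine(patch, det.label, angle) % 360.0
        x0, y0, x1, y1 = det.box
        poses.append(GraspPose(det.label, ((x0 + x1) / 2, (y0 + y1) / 2), angle))
    return poses
```

In this sketch, the only difference between the two variants is the optional `refine` step, which reflects the trade-off stated above: the local search adds computation per detected object in exchange for a more precise angle.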

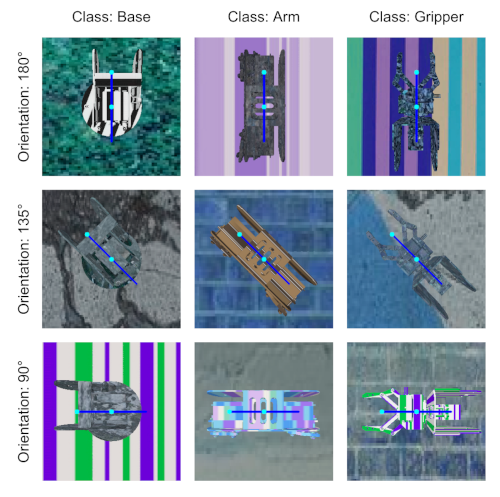

Generating synthetic data for the object detection (S2R-ObjDet) is covered in our previous work. For generating synthetic data for the orientation estimation, the 3D model of the object is placed in the simulator and rotated around the z-axis (perpendicular to the plane where the object is placed), while random textures are added to both the plane and the object. At each rotation increment, an image is taken and its label is generated automatically. Some examples can be seen in Fig. 3. Data generation takes around 0.25 to 0.5 s per image.
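The rotation-and-randomization loop described above might look roughly like the sketch below. It is only an illustration under stated assumptions: `render` is a hypothetical stand-in for the simulator call, `textures` is a hypothetical pool of texture identifiers, and the step size is a free parameter (the source does not state the increment used).

```python
import random
from typing import Any, Callable, Iterator, Sequence, Tuple


def generate_orientation_samples(
    render: Callable[[float, Any, Any], Any],
    textures: Sequence[Any],
    step_deg: float = 1.0,
    seed: int = 0,
) -> Iterator[Tuple[Any, float]]:
    """Yield (image, angle_label) pairs for one full turn around the z-axis.

    The label comes for free: it is the angle the object was rotated to
    before the image was rendered.
    """
    rng = random.Random(seed)
    n_steps = int(round(360.0 / step_deg))
    for i in range(n_steps):
        angle = i * step_deg
        # Domain randomization: independent random textures for plane and object.
        plane_texture = rng.choice(textures)
        object_texture = rng.choice(textures)
        image = render(angle, plane_texture, object_texture)
        yield image, angle


if __name__ == "__main__":
    # Stub renderer that just records its arguments instead of drawing anything.
    fake_render = lambda angle, plane, obj: (angle, plane, obj)
    samples = list(generate_orientation_samples(fake_render, ["wood", "metal"], step_deg=90.0))
    print([label for _, label in samples])
```

Because the label is derived from the simulator state rather than from annotation, no manual labeling is needed for the synthetic part of the dataset.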

We achieved an 80% and a 96.67% success rate in a real-world robotic pick-and-place experiment with the MOGPE RT and the MOGPE HP models, respectively. Our framework provides an industrial tool for fast data generation and model training and requires minimal domain-specific data.

Fig. 1. Top: Illustration of our S2R-ObjDet and S2R-PosEst methods. Bottom: The flowchart diagram of our multi-object grasp pose estimation (MOGPE) methods.

Results

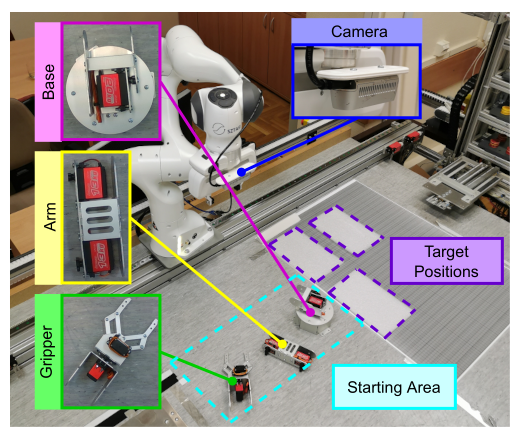

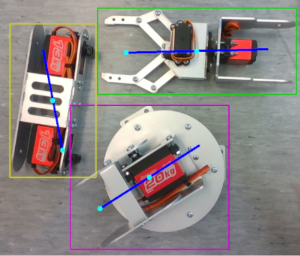

To evaluate our method, three industrial parts were selected, shown in Fig. 2. Furthermore, some generated synthetic images are depicted in Fig. 3.


| Fig. 2. Experimental setup. | Fig. 3. Examples of the generated synthetic training dataset. |

To train the object detection model, 2000 synthetic images were generated alongside one real image (containing all 3 objects) replicated 2000 times. The model achieved a 98.78% mAP50 score with a 0.1224% standard deviation on the real-world test set. To train the orientation estimation models, 4320 synthetic images were generated alongside 10-13 real images per class. The model achieved a 97.04% success rate with a 4.18% standard deviation on the real test dataset. The orientation estimation is considered successful if it is within a range of 10 degrees.
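One way to compute such a success rate is sketched below. Two points are assumptions rather than statements from the source: the criterion is read as an absolute angular error of at most 10 degrees, and angles are treated as periodic over 360 degrees (a symmetric part could justify a shorter period). The function names are illustrative.

```python
from typing import Sequence


def angular_error_deg(pred: float, true: float, period: float = 360.0) -> float:
    """Smallest absolute difference between two angles, with wrap-around."""
    diff = (pred - true) % period
    return min(diff, period - diff)


def orientation_success_rate(preds: Sequence[float], trues: Sequence[float],
                             tol_deg: float = 10.0) -> float:
    """Fraction of predictions whose angular error is within the tolerance."""
    hits = sum(angular_error_deg(p, t) <= tol_deg for p, t in zip(preds, trues))
    return hits / len(preds)


if __name__ == "__main__":
    # 355 vs 5 degrees differ by 10 degrees once wrap-around is handled.
    print(angular_error_deg(355.0, 5.0))
    print(orientation_success_rate([355.0, 100.0, 20.0], [5.0, 80.0, 20.0]))
```

Handling the wrap-around matters here: a naive subtraction would report an error of 350 degrees for a prediction of 355 degrees against a ground truth of 5 degrees.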

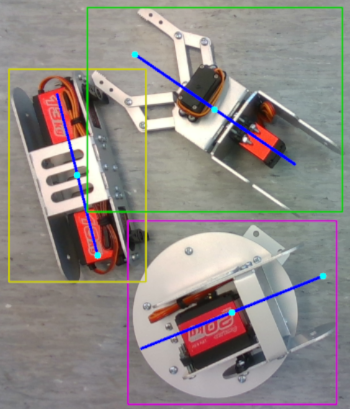

The qualitative results of the multi-object grasp-pose estimation are depicted in the following figures:


| Fig. 4. An accurate prediction. | Fig. 5. An inaccurate prediction. The orientation of the arm is slightly tilted. |

For quantitative evaluation, the robot had to execute pick-and-place manipulation tasks consecutively. A pick-and-place operation is successful if the robot grasps the workpiece, transports it to the target position, and manages to place it there. The experimental setup is depicted in Fig. 2. The results of the robotic experiment are shown in the following table:

| Model | Base | Arm | Gripper | Success rate |
|---|---|---|---|---|
| MOGPE RT | 5/10 | 9/10 | 10/10 | 80% |
| MOGPE HP | 10/10 | 10/10 | 9/10 | 96.67% |
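The overall success rates in the table are consistent with pooling the per-part counts over all 30 attempts per model. The short check below reproduces them, reading each cell as successes out of attempts; the variable and function names are illustrative.

```python
# Per-part results from the table: (successes, attempts).
results = {
    "MOGPE RT": {"Base": (5, 10), "Arm": (9, 10), "Gripper": (10, 10)},
    "MOGPE HP": {"Base": (10, 10), "Arm": (10, 10), "Gripper": (9, 10)},
}


def overall_success_rate(per_part: dict) -> float:
    """Pooled success rate in percent over all attempts of one model."""
    successes = sum(s for s, _ in per_part.values())
    attempts = sum(n for _, n in per_part.values())
    return 100.0 * successes / attempts


for model, per_part in results.items():
    print(f"{model}: {overall_success_rate(per_part):.2f}%")
# MOGPE RT: 80.00%
# MOGPE HP: 96.67%
```

That is, 24 of 30 operations succeeded with MOGPE RT and 29 of 30 with MOGPE HP.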

IFAC World Congress Presentation

Citation

@article{horvath_sim2real_mogpe_2023,
  series = {22nd {IFAC} {World} {Congress}},
  title = {{Sim2Real} {Grasp} {Pose} {Estimation} for {Adaptive} {Robotic} {Applications}},
  volume = {56},
  issn = {2405-8963},
  doi = {10.1016/j.ifacol.2023.10.121},
  url = {http://doi.org/10.1016/j.ifacol.2023.10.121},
  number = {2},
  urldate = {2023-11-30},
  journal = {IFAC-PapersOnLine},
  author = {Horváth, Dániel and Bocsi, Kristóf and Erdős, Gábor and Istenes, Zoltán},
  month = jan,
  year = {2023},
  pages = {5233--5239},
}